Tesla FSD Exploration: How This “Four-Wheeled Robot” Learned to Drive

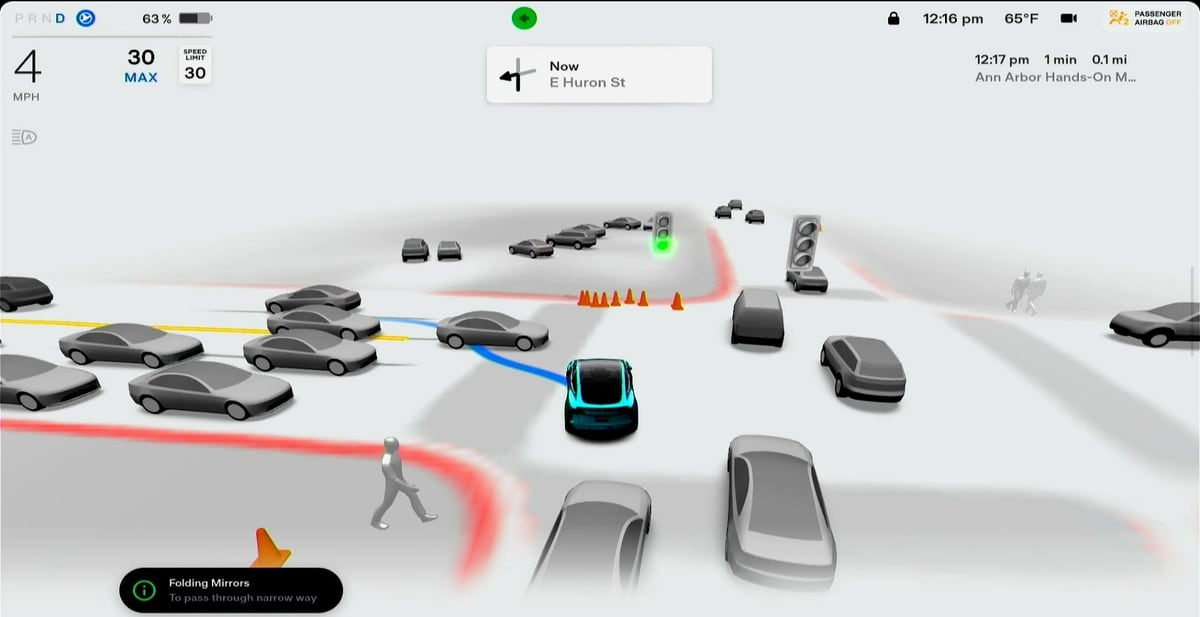

Imagine this: you’re sitting in the driver’s seat, hands off the wheel, as the car smoothly makes a left turn at a busy intersection, avoids a car that suddenly cuts into its lane, and stops gently at a red light.

This isn’t a sci-fi movie — it’s the reality being shaped by Tesla’s Full Self-Driving (FSD) technology.

With the recent launch of the 2025 Tesla Model 3 in China, FSD is once again in the spotlight. But what’s the “magic” behind it? Let’s break down how Tesla turned a car into a “thinking driver.”


🔄 From “Hard-Coded Rules” to “Learning by Example”

📜 The Old Era: 300,000 Lines of “Rigid Robot” Code

Before FSD V12, Tesla’s autonomous driving system worked like a robot that followed an enormous instruction manual. Engineers had to write countless rules, for example:

“If the traffic light ahead is red and distance is less than 25m, brake.”

“If a car is in the left lane within 50m, do not change lanes.”

“If a pedestrian is detected, slow to 20 km/h immediately.”

This sounds reasonable, but it is fragile. FSD V11’s planning code alone ran to roughly 300,000 lines of C++. And what happens if a pedestrian steps out away from a crosswalk, or the light is 25.5 m away? Every case the rules did not anticipate could confuse the system.
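To make the fragility concrete, here is a minimal sketch of one hand-written rule in the style quoted above. The function name and the 25 m constant are hypothetical illustrations taken from the example rule, not Tesla’s actual code.

```python
# Hypothetical rule in the style quoted above; the names and the 25 m threshold
# are illustrative, not taken from Tesla's code.
RED_LIGHT_BRAKE_DISTANCE_M = 25.0


def should_brake_for_light(light: str, distance_m: float) -> bool:
    """Brake only if the light is red AND closer than a fixed threshold."""
    return light == "red" and distance_m < RED_LIGHT_BRAKE_DISTANCE_M


for distance in (24.9, 25.0, 25.5):
    decision = should_brake_for_light("red", distance)
    print(f"red light at {distance} m -> brake: {decision}")
```

A difference of a few centimetres flips the decision, and every situation the threshold does not capture needs yet another rule. Multiply that by every traffic scenario and the codebase balloons.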

👀 The New Era: Learning From “Veteran Drivers”

Starting with V12, Tesla flipped the approach. Instead of telling the AI “brake for a red light,” engineers let it watch millions of real driving clips from skilled human drivers. The network learned by imitation, much as a child learns by watching the people around them: no manual, just observation and practice.
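The core idea, often called behaviour cloning, fits in a few lines. This is a toy sketch under loud assumptions: the “veteran driver” is a hypothetical function, the only observation is the gap to the car ahead, and a one-variable least-squares line stands in for the large neural network that watches video in the real system. The point is that no braking rule is written by hand; the policy is fitted to examples.

```python
import random


def expert_brake(gap_m: float) -> float:
    """Hypothetical 'veteran driver': brakes harder the closer the car ahead (0..1)."""
    return max(0.0, min(1.0, 1.0 - gap_m / 50.0))


def fit_line(xs, ys):
    """Ordinary least squares for y = a * x + b (closed-form solution)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return a, mean_y - a * mean_x


# 1. Collect demonstrations: observations (gap) paired with what the expert did.
rng = random.Random(0)
gaps = [rng.uniform(5.0, 45.0) for _ in range(1000)]
actions = [expert_brake(g) + rng.gauss(0.0, 0.02) for g in gaps]  # humans are noisy

# 2. Fit the policy to imitate the expert; no braking rule is written by hand.
slope, intercept = fit_line(gaps, actions)


def learned_policy(gap_m: float) -> float:
    """Imitated behaviour, clipped to the valid brake range."""
    return max(0.0, min(1.0, slope * gap_m + intercept))


print(f"learned: brake = {slope:.3f} * gap + {intercept:.2f}")
```

The learner recovers the expert’s behaviour from examples alone. Scaling the same recipe up means replacing the single feature with camera video and the straight line with a deep network.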

📷 Tesla’s “Eyes”: Why 8 Cameras Are Enough

💡 The “Photons In, Control Out” Philosophy

While many competitors rely on expensive rooftop LiDAR (tens of thousands of dollars per unit), Tesla relies on cameras alone, reasoning that humans drive with two eyes, so AI should be able to as well.

Each Tesla carries 8 strategically placed cameras:

3 front-facing (left, center, right)

1 rear

2 on each side (4 in total)

🔬 Raw Light Data, Not Pretty Pictures

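These aren’t ordinary cameras. Instead of processed, beautified images, they feed raw sensor data (essentially the light measured at each pixel) directly to the AI, preserving detail that image processing would otherwise discard.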

Tesla even redesigned the camera filters: instead of the standard Bayer pattern, “red-green-green-blue” (RGGB), they use “red-clear-clear-clear” (RCCC), which means:

Red channel: critical for detecting brake lights and traffic signals

Clear channels: unfiltered pixels capture the maximum amount of light for better vision at night or in bad weather
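Why do clear (transparent, unfiltered) pixels help in the dark? A back-of-envelope sketch, under the idealised assumption that a red, green, or blue filter passes about one third of visible light while a clear pixel passes all of it. Real filter transmission curves are more complicated, so treat the ratio as illustrative only.

```python
# Idealised assumption: a red, green, or blue filter passes about one third of
# visible light, while a clear (unfiltered) pixel passes all of it.
# Real filter transmission curves are more complicated.
TRANSMISSION = {"R": 1 / 3, "G": 1 / 3, "B": 1 / 3, "C": 1.0}

RGGB = ["R", "G", "G", "B"]  # standard Bayer 2x2 tile
RCCC = ["R", "C", "C", "C"]  # red-clear-clear-clear 2x2 tile


def mean_light_throughput(tile):
    """Average fraction of incoming light that reaches the pixels in a 2x2 tile."""
    return sum(TRANSMISSION[p] for p in tile) / len(tile)


rggb = mean_light_throughput(RGGB)
rccc = mean_light_throughput(RCCC)
print(f"RGGB: {rggb:.2f}  RCCC: {rccc:.2f}  gain: {rccc / rggb:.1f}x")
```

Under these assumptions the RCCC tile gathers about 2.5 times as much light. The trade-off is colour: the sensor can tell “red” from “not red” but little else, which is why the one filtered channel is red, the colour of brake lights and stop signals.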

📏 Measuring Distance Without LiDAR

During training, Tesla temporarily used LiDAR to provide “ground truth” distances: the camera network’s distance estimates were checked against the LiDAR measurements and corrected. Over time, the AI learned to judge distance from vision alone, much as humans develop depth perception.
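One common way to use LiDAR as a teacher is a masked error between the camera network’s depth guesses and the LiDAR readings. This is a generic sketch, not Tesla’s training code; the function name is hypothetical, and the pixel values are made up purely to show the arithmetic. LiDAR returns are sparse, so pixels without a reading are simply skipped.

```python
from typing import List, Optional


def masked_l1_depth_loss(pred_m: List[float], lidar_m: List[Optional[float]]) -> float:
    """Mean absolute error between camera depth estimates and LiDAR 'ground truth'.

    LiDAR is sparse, so pixels without a LiDAR return (None) are skipped.
    """
    errors = [abs(p - t) for p, t in zip(pred_m, lidar_m) if t is not None]
    return sum(errors) / len(errors) if errors else 0.0


# Four illustrative pixels: the network's depth guesses vs. LiDAR where it has a reading.
pred = [10.0, 22.0, 35.0, 8.0]
lidar = [10.5, None, 33.0, None]
print(masked_l1_depth_loss(pred, lidar))
```

Training nudges the network’s weights to shrink this number across millions of frames. Once the error is small enough, the LiDAR can be removed and the cameras carry on alone.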

🧠 Educating the AI: From Cloud School to Real-World Tests

🎓 Phase 1: Training in Data Centers

Tesla trains FSD on its Cortex AI supercomputer:

Time: continuous computation over several months

Compute: hundreds of thousands of GPUs

Cost: tens of millions of dollars in electricity monthly

It studies millions of driving scenarios (sunny highways, rainy intersections, snowy nights) until it handles them reliably.

🚦 Phase 2: On-Road “Exams”

After training, updates are sent over-the-air to millions of Teslas. The onboard computer (e.g., HW4) then runs “inference,” feeding live camera data through the trained network, 20–50 times per second:

Receive 8 camera feeds

Analyze road situation

Output steering, throttle, and braking commands

For reference, humans typically take 0.5–1 second to react, while FSD issues new commands every 0.02–0.05 seconds (1/50 to 1/20 of a second).
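The perceive, decide, act cycle above can be sketched as a fixed-rate control loop. Everything here is a hypothetical stand-in: `read_cameras` returns placeholder frames and `policy` returns a constant command where the real system would run a neural network. What the sketch does show is how the 20–50 Hz rate translates into a 0.05–0.02 s budget per cycle.

```python
import time
from dataclasses import dataclass

NUM_CAMERAS = 8


@dataclass
class Controls:
    steering_deg: float
    throttle: float  # 0..1
    brake: float     # 0..1


def read_cameras():
    """Hypothetical stand-in: one placeholder frame per camera."""
    return [f"frame_from_camera_{i}" for i in range(NUM_CAMERAS)]


def policy(frames):
    """Hypothetical stand-in for the neural network: hold the lane, gentle throttle."""
    assert len(frames) == NUM_CAMERAS
    return Controls(steering_deg=0.0, throttle=0.2, brake=0.0)


def run(hz: float, ticks: int):
    period = 1.0 / hz  # 50 Hz -> 0.02 s per cycle, 20 Hz -> 0.05 s
    outputs = []
    for _ in range(ticks):
        start = time.monotonic()
        outputs.append(policy(read_cameras()))  # perceive -> decide -> act
        elapsed = time.monotonic() - start
        time.sleep(max(0.0, period - elapsed))  # wait out the rest of the cycle
    return outputs


for hz in (20, 50):
    print(f"{hz} Hz -> new command every {1 / hz:.2f} s")
```

If the network takes longer than the period, the sleep drops to zero and the loop falls behind, which is why onboard compute has to grow along with model size.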

📊 Data: Tesla’s True Moat

Tesla’s competitive edge is its massive driving dataset. Roughly 5,000 new Teslas join the data network every day. Even with FSD switched off, cars run in “shadow mode”: the AI silently decides what it would do, compares that with what the driver actually did, and uploads the useful cases.
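A shadow-mode trigger can be as simple as flagging moments where the silent AI and the human disagree by more than some margin. This is a minimal sketch; the thresholds, the `Decision` type, and the log format are hypothetical, and the real selection logic is not described in this article.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    steering_deg: float
    brake: float  # 0 (none) .. 1 (full)


# Hypothetical disagreement thresholds, chosen only for illustration.
STEER_DIFF_DEG = 5.0
BRAKE_DIFF = 0.3


def disagrees(ai: Decision, human: Decision) -> bool:
    """True when the silent AI would have driven noticeably differently."""
    return (abs(ai.steering_deg - human.steering_deg) > STEER_DIFF_DEG
            or abs(ai.brake - human.brake) > BRAKE_DIFF)


def moments_to_upload(log):
    """log: (timestamp_s, ai_decision, human_decision) tuples recorded while driving."""
    return [t for t, ai, human in log if disagrees(ai, human)]


log = [
    (0.0, Decision(0.0, 0.0), Decision(1.0, 0.0)),   # near-identical: ignore
    (0.1, Decision(0.0, 0.0), Decision(0.0, 0.8)),   # driver braked hard, AI would not have
    (0.2, Decision(12.0, 0.0), Decision(0.0, 0.0)),  # AI would have swerved, driver did not
]
print(moments_to_upload(log))
```

Only the disagreements are worth the upload bandwidth: they are exactly the situations where the current model still has something to learn.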

With ~5 million cars worldwide acting as around-the-clock driving instructors, Tesla can pick out data that is “just right,” neither overly aggressive nor overly cautious: a Goldilocks principle for choosing driving examples.
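A “just right” filter might look something like this. The clip fields, the speed-ratio band, and the braking limit are all hypothetical values chosen for illustration; the article does not say which signals Tesla actually uses to judge driving style.

```python
from typing import NamedTuple


class Clip(NamedTuple):
    clip_id: str
    mean_speed_kmh: float
    speed_limit_kmh: float
    peak_decel_ms2: float  # hardest braking in the clip, m/s^2


# Hypothetical "just right" band; the thresholds are illustrative only.
MIN_SPEED_RATIO = 0.85  # slower than this relative to the limit: too cautious
MAX_SPEED_RATIO = 1.05  # faster than this: too aggressive
MAX_DECEL_MS2 = 4.0     # harder braking than this: too aggressive


def is_just_right(clip: Clip) -> bool:
    ratio = clip.mean_speed_kmh / clip.speed_limit_kmh
    too_cautious = ratio < MIN_SPEED_RATIO
    too_aggressive = ratio > MAX_SPEED_RATIO or clip.peak_decel_ms2 > MAX_DECEL_MS2
    return not (too_cautious or too_aggressive)


clips = [
    Clip("a", 48, 50, 1.5),  # steady, near the limit
    Clip("b", 30, 50, 0.8),  # crawling
    Clip("c", 62, 50, 2.0),  # speeding
    Clip("d", 50, 50, 6.5),  # harsh braking
]
print([c.clip_id for c in clips if is_just_right(c)])
```

Because the network imitates whatever it is shown, filtering the training examples is effectively choosing the driving personality the car will end up with.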

🕹 Simulators & Specialist “Drivers”

🖥 Extreme Scenarios in Simulation

When rare events are hard to capture in real life, Tesla’s powerful simulator can:

Turn sunny weather into rain or snow

Simulate blinding sunlight

Create unusual traffic situations
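Part of a simulator’s value is combinatorial: one recorded scene can be replayed under many conditions. The sketch below sweeps a few hypothetical knobs (weather, sun glare, a scripted event) over a single route; the parameter names are illustrative, and a real simulator exposes far richer controls.

```python
from itertools import product

# Hypothetical knobs; a real simulator exposes far richer parameters.
WEATHER = ["clear", "rain", "snow"]
SUN = ["none", "low_sun_glare"]
EVENTS = ["none", "jaywalker", "cut_in", "stalled_truck"]


def scenario_variants(base_route: str):
    """Yield every combination of conditions for one recorded route."""
    for weather, sun, event in product(WEATHER, SUN, EVENTS):
        yield {"route": base_route, "weather": weather, "sun": sun, "event": event}


variants = list(scenario_variants("recorded_intersection_042"))
print(len(variants))
```

Three weather settings, two lighting settings, and four events already turn one clip into 3 × 2 × 4 = 24 training scenarios, including rare combinations a fleet might almost never record.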

👥 The “Mixture of Experts” (MoE) Model

FSD is like a team of specialized drivers:

City intersection expert

Highway expert

Bad-weather expert

Parking expert

Depending on conditions, a routing step selects the most relevant experts and blends their outputs into the safest decision.
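The article does not detail Tesla’s architecture, so the following is a generic top-k Mixture-of-Experts router rather than FSD’s actual design. A gating network scores each expert, a softmax turns the scores into weights, only the top k experts are used, and their outputs are blended. The expert names follow the analogy above; in real MoE models, specialisation is learned rather than hand-labelled, and the gate scores and steering proposals here are hypothetical numbers.

```python
import math

EXPERTS = ["city_intersection", "highway", "bad_weather", "parking"]


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_scores, k=2):
    """Pick the top-k experts and renormalise their gate weights to sum to 1."""
    weights = softmax(gate_scores)
    top = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)[:k]
    norm = sum(weights[i] for i in top)
    return {EXPERTS[i]: weights[i] / norm for i in top}


def combine(expert_outputs, routing):
    """Weighted sum of the selected experts' proposed steering angles (degrees)."""
    return sum(w * expert_outputs[name] for name, w in routing.items())


# Hypothetical gate scores for a rainy highway: highway and bad-weather experts win.
routing = route([0.1, 2.0, 1.5, -1.0])
proposals = {"city_intersection": -5.0, "highway": 1.0, "bad_weather": 3.0, "parking": 20.0}
print(routing, combine(proposals, routing))
```

Only two of the four experts run, which is the practical appeal of MoE: total capacity can grow while the compute spent per decision stays roughly constant.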

📈 Evolution by the Numbers

FSD V12: ~100–200M parameters

FSD V13: ~3–6× more

FSD V14: expected >1B parameters

Onboard hardware keeps pace:

HW3: ~72 TOPS per chip (two chips, ~144 TOPS per computer)

HW4: 150–200 TOPS

HW5: projected >1,500 TOPS

(TOPS = trillions of operations per second; more TOPS lets the car run larger networks at the same update rate.)
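To connect TOPS to the 20–50 updates per second mentioned earlier, divide the peak throughput by the update rate to get the operations available per decision. This is simple arithmetic on the figures in the list above; real chips rarely sustain their peak rating, so actual budgets are lower.

```python
def ops_per_cycle(tops: float, cycles_per_second: float) -> float:
    """Peak operations available per control cycle: TOPS * 1e12 / cycles per second."""
    return tops * 1e12 / cycles_per_second


for tops in (72, 150):   # figures from the list above
    for hz in (20, 50):  # inference rate range quoted earlier
        budget = ops_per_cycle(tops, hz) / 1e12
        print(f"{tops} TOPS at {hz} Hz -> {budget:.2f} trillion ops per cycle")
```

A bigger model needs more operations per decision, so to keep the same update rate the hardware budget has to grow with it.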

🛡 The Safety Advantage Over Humans

AI has natural benefits:

Never fatigued or distracted

Faster reactions (a fresh decision every 20–50 ms)

No road rage or emotional driving
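What does reaction time mean on the road? The distance a car covers before braking even begins is simply speed × reaction time. The sketch below compares the human range (0.5–1 s) with FSD’s 20–50 ms update interval at 100 km/h. Treating the update interval as the full reaction time is optimistic, since camera, processing, and brake-actuation delays add to it.

```python
def reaction_distance_m(speed_kmh: float, reaction_s: float) -> float:
    """Distance covered before braking starts: speed in m/s times reaction time in s."""
    return speed_kmh / 3.6 * reaction_s


SPEED_KMH = 100
for label, t in [("human, 1 s", 1.0), ("human, 0.5 s", 0.5),
                 ("50 ms update", 0.05), ("20 ms update", 0.02)]:
    print(f"{label}: {reaction_distance_m(SPEED_KMH, t):.1f} m")
```

At 100 km/h a one-second human reaction costs about 28 m of travel before the brakes engage, compared with roughly 1–1.5 m for a 20–50 ms decision cycle.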

Future vision:

Book a ride on your phone

A driverless Tesla arrives

It takes you to your destination safely and cheaply

🔮 From “Magic” to Science

FSD’s formula for success is straightforward:

Good Data × Large Models × High Compute × Strict Processes = Superhuman Driving

It’s no longer a matter of if full autonomy will happen, but when. Tesla’s journey from rigid rules to intelligent learning is reshaping how we think about mobility.

So, next time you see a Tesla drive itself, you’ll know exactly what kind of intelligence is working under the hood.
